Understanding Graph Neural Networks: The Future of Learning from Graph Data

Over the past decade, neural networks have revolutionized the way we process structured data, particularly images and text. Popular models such as convolutional networks, recurrent networks, and autoencoders perform remarkably well on data laid out on regular structures: grids of pixels, sequences of tokens, or fixed-size vectors and matrices. Much real-world data does not fit that mold. Graph data in particular has no fixed ordering of nodes and no fixed number of neighbors per node. This raises an important question: is there a model that can learn efficiently from graph data? The answer lies in Graph Neural Networks (GNNs).

The Rise of Graph Neural Networks

Graph Neural Networks were first introduced in 2005, but only in recent years have they gained substantial traction in the machine learning community. A GNN models the relationships between the nodes of a graph and produces numerical representations (embeddings) that capture both the structure of the graph and its features. This matters because many real-world datasets are naturally graphs: social networks, chemical compounds, transportation systems, and knowledge graphs are all examples, so the range of applications for GNNs is wide.

The Core Problem: Mapping Graphs to Labels

To understand how GNNs work, consider a fundamental problem: mapping a given graph to a single label, which can be a numeric value, a class, or any other form of output. In mathematical terms, we seek a function \( F \) such that:

\[ F(G) = y \]

where \( G \) is the input graph and \( y \) is its label. Internally, a GNN usually computes an embedding of \( G \) first and then maps that embedding to \( y \).

For instance, suppose each graph represents a chemical compound and its label indicates how likely that compound is to be useful in drug development. If we can predict such a label for every graph, we can rank molecules by how promising they are for pharmaceutical applications, which has the potential to accelerate drug discovery and development.
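As a concrete picture of what F receives as input, here is one minimal way to encode a molecule as a graph, assuming a list of per-atom feature vectors plus an undirected bond list (a common convention, not the only one). Water (H2O) is used because its structure is unambiguous: one oxygen atom bonded to two hydrogen atoms. The `MoleculeGraph` class, the one-hot element vocabulary, and the helper names are hypothetical, not any library's API.

```python
from __future__ import annotations
from dataclasses import dataclass

# Hypothetical encoding: each atom becomes a node with a one-hot element feature.
ELEMENTS = ["H", "C", "N", "O"]  # illustrative vocabulary, not exhaustive


def one_hot(symbol: str) -> list[float]:
    """Return a one-hot feature vector for an element symbol."""
    return [1.0 if symbol == e else 0.0 for e in ELEMENTS]


@dataclass
class MoleculeGraph:
    atoms: list[str]               # node labels, e.g. ["O", "H", "H"]
    bonds: list[tuple[int, int]]   # undirected edges as pairs of atom indices

    def node_features(self) -> list[list[float]]:
        """One feature vector per node, in atom order."""
        return [one_hot(a) for a in self.atoms]

    def neighbors(self, i: int) -> list[int]:
        """Indices of atoms bonded to atom i (bonds are treated as undirected)."""
        out = []
        for a, b in self.bonds:
            if a == i:
                out.append(b)
            elif b == i:
                out.append(a)
        return sorted(out)


# Water: oxygen (node 0) bonded to two hydrogens (nodes 1 and 2).
water = MoleculeGraph(atoms=["O", "H", "H"], bonds=[(0, 1), (0, 2)])
print(water.neighbors(0))        # [1, 2]
print(water.node_features()[0])  # [0.0, 0.0, 0.0, 1.0]
```

A model playing the role of F would consume `node_features()` and `bonds` and return a single number, such as the predicted likelihood for that compound.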

Leveraging Recurrent Neural Networks

Interestingly, we already have a type of neural network that operates on one special kind of graph: recurrent neural networks (RNNs). RNNs process sequential data, and a sequence can be viewed as a chain (or path) graph. Time-series data, for example, is a series of nodes (timestamps), each connected only to the next one.
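The "sequence as a chain graph" view can be made explicit: each timestamp is a node, each node has one edge to its successor, and a vanilla RNN is a state update applied along those edges in order. The sketch below assumes NumPy and uses random, untrained weights; `W`, `U`, the hidden size, and the function names are illustrative choices, not taken from the original.

```python
from __future__ import annotations
import numpy as np


def chain_edges(num_steps: int) -> list[tuple[int, int]]:
    """Edges of a chain (path) graph: timestamp t links to timestamp t + 1."""
    return [(t, t + 1) for t in range(num_steps - 1)]


def rnn_over_chain(xs: np.ndarray, W: np.ndarray, U: np.ndarray) -> np.ndarray:
    """Run a vanilla RNN, h_t = tanh(W h_{t-1} + U x_t), by following chain edges.

    xs has shape (num_steps, input_dim); returns states of shape (num_steps, hidden_dim).
    """
    num_steps = xs.shape[0]
    hidden_dim = W.shape[0]
    hs = np.zeros((num_steps, hidden_dim))
    hs[0] = np.tanh(U @ xs[0])  # the first node has no predecessor (h_{-1} = 0)
    for src, dst in chain_edges(num_steps):
        # The only incoming edge of node dst comes from node src = dst - 1.
        hs[dst] = np.tanh(W @ hs[src] + U @ xs[dst])
    return hs


rng = np.random.default_rng(0)
xs = rng.normal(size=(5, 3))        # 5 timestamps, 3 features each
W = rng.normal(size=(4, 4)) * 0.5   # hidden-to-hidden weights
U = rng.normal(size=(4, 3)) * 0.5   # input-to-hidden weights
print(chain_edges(5))               # [(0, 1), (1, 2), (2, 3), (3, 4)]
print(rnn_over_chain(xs, W, U).shape)  # (5, 4)
```

Walking the edge list in order gives exactly the usual RNN loop, which is why an RNN can be read as message passing restricted to chain graphs.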

Extending this idea, we can build a network in which each graph node is represented by a recurrent unit (such as an LSTM), and the information at each node is an embedding that travels through the graph along its edges. As with RNNs in natural language processing tasks such as machine translation, the recurrent units help preserve information as it moves through the network.
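A per-node recurrent unit can be sketched as a single cell whose parameters are shared by every node, so the same weights update each node's embedding from whatever information arrives at it. This is a minimal NumPy sketch under stated assumptions: a GRU cell is swapped in for the LSTM mentioned above only because it keeps a single state vector, and `GRUNodeCell` and its initialization scheme are hypothetical.

```python
from __future__ import annotations
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


class GRUNodeCell:
    """Hypothetical GRU-style unit shared by all nodes of the graph.

    x: information arriving at a node, shape (..., in_dim)
    h: the node's current embedding,   shape (..., hidden_dim)
    """

    def __init__(self, in_dim: int, hidden_dim: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        scale = 1.0 / np.sqrt(hidden_dim)
        # Input weights (W) and state weights (U) for the update gate "z",
        # the reset gate "r" and the candidate state "c".
        self.W = {k: rng.uniform(-scale, scale, (in_dim, hidden_dim)) for k in "zrc"}
        self.U = {k: rng.uniform(-scale, scale, (hidden_dim, hidden_dim)) for k in "zrc"}
        self.b = {k: np.zeros(hidden_dim) for k in "zrc"}

    def __call__(self, x: np.ndarray, h: np.ndarray) -> np.ndarray:
        z = sigmoid(x @ self.W["z"] + h @ self.U["z"] + self.b["z"])
        r = sigmoid(x @ self.W["r"] + h @ self.U["r"] + self.b["r"])
        c = np.tanh(x @ self.W["c"] + (r * h) @ self.U["c"] + self.b["c"])
        # Interpolate between the old embedding and the candidate embedding.
        return (1.0 - z) * h + z * c


cell = GRUNodeCell(in_dim=3, hidden_dim=4)
x = np.ones((5, 3))    # incoming information for 5 nodes
h = np.zeros((5, 4))   # initial node embeddings
h_new = cell(x, h)     # the same weights update all 5 nodes at once
print(h_new.shape)     # (5, 4)
```

Sharing one cell across nodes keeps the parameter count independent of graph size, so the same model can be applied to graphs with different numbers of nodes.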

The Architecture of GNNs

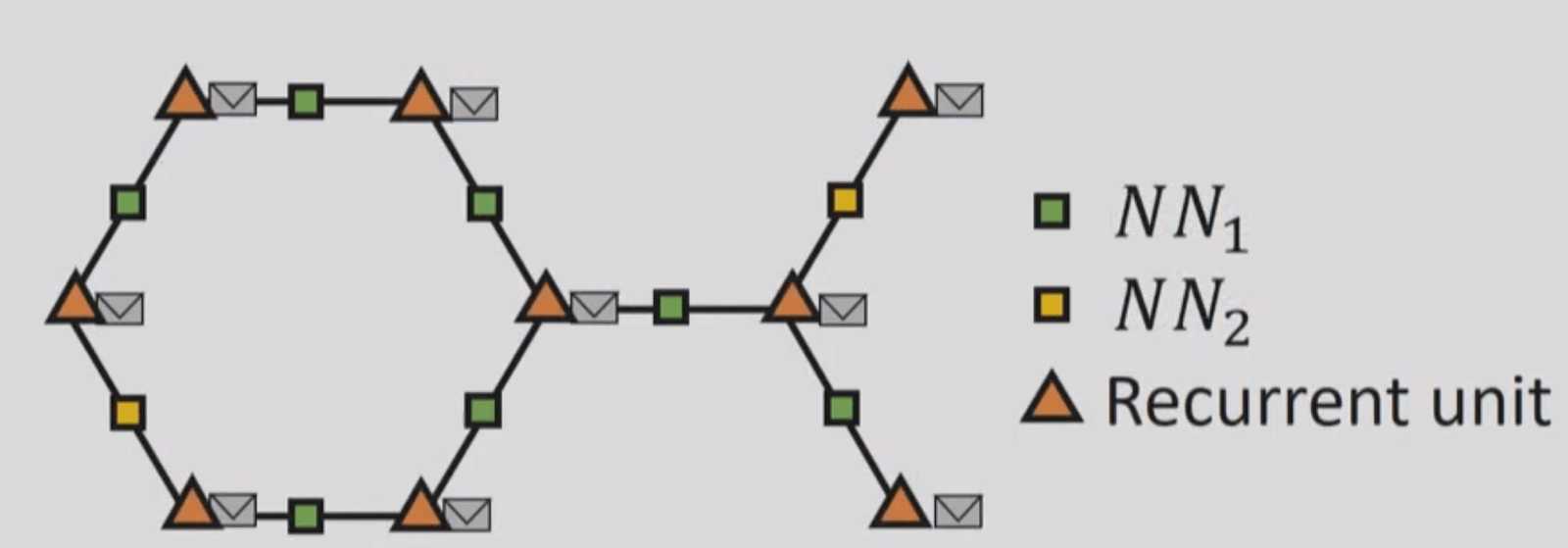

To visualize this concept, consider the following representation of a GNN:

In this architecture, each node of the graph (shown as an orange triangle) is replaced by a recurrent unit. Each edge is also replaced by a neural network that captures the information associated with that edge, such as its weight.
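One way to read "edges represented by neural networks" is as a learned message function: for an edge from node u to node v with weight w, a small network takes u's embedding and w and produces the message that v receives. The single-layer form below, and the names `EdgeNetwork`, `A`, `B`, and `c`, are illustrative assumptions in NumPy, not the architecture in the figure.

```python
from __future__ import annotations
import numpy as np


class EdgeNetwork:
    """Hypothetical message function: message = tanh(h_u @ A + w * B + c).

    h_u is the sending node's embedding and w is the scalar edge weight.
    """

    def __init__(self, hidden_dim: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.A = rng.normal(scale=0.5, size=(hidden_dim, hidden_dim))
        self.B = rng.normal(scale=0.5, size=hidden_dim)  # how the edge weight enters
        self.c = np.zeros(hidden_dim)                     # bias, zero at initialization

    def __call__(self, h_u: np.ndarray, w: float) -> np.ndarray:
        return np.tanh(h_u @ self.A + w * self.B + self.c)


edge_net = EdgeNetwork(hidden_dim=4)
h_u = np.array([0.2, -0.1, 0.4, 0.0])
strong = edge_net(h_u, w=2.0)
weak = edge_net(h_u, w=0.1)
# Same sender, different edge weights, so the receiver gets different messages.
print(np.allclose(strong, weak))  # False
```

Because the edge weight is an input to the network rather than a fixed multiplier, training can learn how strongly (and in which direction) each kind of edge should affect the message.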

The Learning Process

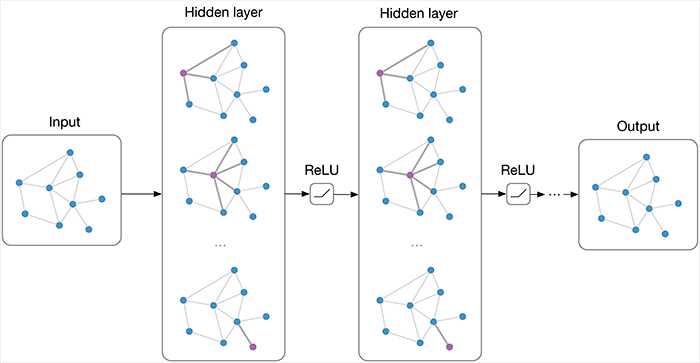

The learning process in a GNN unfolds as follows. At each time step, every node sums the embeddings of its neighboring nodes and passes that sum, together with its own embedding, to its recurrent unit. The unit produces a new embedding that combines information from the node and all of its immediate neighbors. Repeating this step spreads information further: after two steps a node's embedding reflects its second-order neighbors, and after k steps it reflects every node within k hops. In a connected graph, once the number of steps reaches the graph's diameter, every node has received information from every other node.
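The update described above (sum the neighbors, then feed the sum and the node's own embedding into a shared recurrent unit) can be sketched in a few lines of NumPy, and the same sketch shows information spreading one hop per step. Assumptions for this demo: a plain tanh recurrent cell stands in for the LSTM, and the weights are positive with no bias, so a node's embedding stays exactly zero until information from node 0 reaches it. A trained model would use learned weights, usually with biases.

```python
from __future__ import annotations
import numpy as np


def propagate(h: np.ndarray, neighbors: dict[int, list[int]],
              W_self: np.ndarray, W_msg: np.ndarray, steps: int) -> np.ndarray:
    """Run `steps` rounds of sum-aggregation message passing.

    Each round, every node sums its neighbors' embeddings and feeds that sum,
    together with its own embedding, into a shared tanh recurrent cell.
    """
    for _ in range(steps):
        msgs = np.zeros_like(h)
        for v, nbrs in neighbors.items():
            for u in nbrs:
                msgs[v] += h[u]                  # sum of neighbor embeddings
        h = np.tanh(h @ W_self + msgs @ W_msg)   # all nodes updated synchronously
    return h


# Path graph 0 - 1 - 2 - 3 (diameter 3).
neighbors = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
rng = np.random.default_rng(0)
dim = 2
W_self = rng.uniform(0.1, 1.0, size=(dim, dim))  # positive weights (demo assumption)
W_msg = rng.uniform(0.1, 1.0, size=(dim, dim))

h0 = np.zeros((4, dim))
h0[0] = 1.0  # only node 0 starts with information
for k in range(4):
    h = propagate(h0, neighbors, W_self, W_msg, steps=k)
    reached = [v for v in range(4) if np.any(h[v] != 0)]
    print(k, reached)
# 0 [0]
# 1 [0, 1]
# 2 [0, 1, 2]
# 3 [0, 1, 2, 3]
```

Node 3 is three hops from node 0, so it only receives node 0's information at step 3, which equals the diameter of this path graph.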

The final step collects all node embeddings and aggregates them (for example, by summing or averaging) into a single embedding that represents the entire graph. This graph embedding can then be used in downstream tasks such as classification, prediction, or clustering.
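The readout step can be sketched as follows, assuming summation as the aggregation (mean and max are also common choices) and a hypothetical logistic-regression head that turns the graph embedding into a label between 0 and 1. The head parameters here are random and untrained; only the structure is meant to be illustrative.

```python
from __future__ import annotations
import numpy as np


def readout(node_embeddings: np.ndarray) -> np.ndarray:
    """Collapse (num_nodes, dim) node embeddings into one (dim,) graph embedding by summing."""
    return node_embeddings.sum(axis=0)


def predict_label(graph_embedding: np.ndarray, w: np.ndarray, b: float) -> float:
    """Hypothetical downstream head: a logistic-regression score between 0 and 1."""
    return float(1.0 / (1.0 + np.exp(-(graph_embedding @ w + b))))


rng = np.random.default_rng(0)
H = rng.normal(size=(5, 3))     # final embeddings for a 5-node graph
w, b = rng.normal(size=3), 0.0  # illustrative, untrained head parameters

g = readout(H)
perm = rng.permutation(5)
# Summation ignores node order, so relabeling the nodes gives the same graph embedding.
print(np.allclose(g, readout(H[perm])))  # True
print(predict_label(g, w, b))
```

Order-independence is the key design requirement for the readout: a graph has no canonical node ordering, so any aggregation that depended on the order would give different answers for the same molecule.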

Applications and Experimentation with GNNs

The potential applications of Graph Neural Networks are extensive. They can be applied in fields such as social network analysis, recommendation systems, drug discovery, and even in analyzing transportation networks. If you’re interested in experimenting with GNNs, there are several frameworks available, each with its own strengths. For instance, libraries like PyTorch Geometric and DGL (Deep Graph Library) offer robust documentation and community support, making them excellent choices for both beginners and seasoned practitioners.

Conclusion

Graph Neural Networks represent a significant advancement in our ability to learn from complex, irregular, relational data. By capturing the relationships and interactions within graph data, GNNs open up new avenues for research and application across many domains. As we continue to explore the capabilities of GNNs, the possibilities for innovation and discovery keep growing.

If you’re eager to dive deeper into the world of deep learning and GNNs, consider exploring resources such as the Deep Learning in Production Book, which provides insights into building, training, deploying, and maintaining deep learning models.


With the right tools and knowledge, you can harness the power of Graph Neural Networks and contribute to the exciting future of machine learning.