The Rise of Deepfakes: Understanding the Technology and Its Implications

In recent years, fake news has emerged as a significant threat to society, fueled by the rapid spread of false information on social media platforms. It shapes public opinion and can influence critical decision-making. Compounding the problem, automated systems, including advanced artificial intelligence, still struggle to identify fabricated data accurately. One of the most alarming developments in this area is "Deepfake" technology, which manipulates images and videos by swapping faces. Deepfakes have often been used for comedy, such as placing celebrity faces in humorous scenarios or making politicians appear to say ridiculous things, but the technology also has serious applications, particularly in film and media production.

How Do Deepfakes Work?

To understand Deepfakes, we start with the technology behind many of them: Generative Adversarial Networks (GANs). Introduced by Goodfellow et al. in 2014, a GAN consists of two competing neural networks, the generator and the discriminator, trained simultaneously. The generator creates synthetic data, while the discriminator judges whether each sample is real or generated. Because each network improves in response to the other, the generated images become increasingly realistic, to the point where even human observers find it difficult to tell real from fake.
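
The adversarial loop can be made concrete with a deliberately tiny example. The sketch below is not how image GANs are built in practice; it assumes one-dimensional data, a linear generator, and a logistic discriminator so that the gradients fit on one line each. The function name `train_toy_gan`, the target distribution N(4, 1), and the learning rate are all illustrative choices, not taken from any library or paper.

```python
import math
import random

def sigmoid(t):
    # Numerically safe logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

def train_toy_gan(steps=2000, lr=0.01, seed=0):
    """Alternate discriminator and generator updates on 1-D data.

    Generator:      G(z) = a * z + b, with z ~ N(0, 1)
    Discriminator:  D(x) = sigmoid(w * x + c)
    Real data:      x ~ N(4, 1)  (an arbitrary illustrative target)
    """
    rng = random.Random(seed)
    a, b = 1.0, 0.0   # generator parameters
    w, c = 0.1, 0.0   # discriminator parameters
    for _ in range(steps):
        x_real = rng.gauss(4.0, 1.0)
        z = rng.gauss(0.0, 1.0)
        x_fake = a * z + b

        # Discriminator step: gradient ascent on
        # log D(x_real) + log(1 - D(x_fake)).
        d_real = sigmoid(w * x_real + c)
        d_fake = sigmoid(w * x_fake + c)
        w += lr * ((1.0 - d_real) * x_real - d_fake * x_fake)
        c += lr * ((1.0 - d_real) - d_fake)

        # Generator step: gradient ascent on log D(G(z)),
        # the commonly used non-saturating generator objective.
        d_fake = sigmoid(w * x_fake + c)
        grad_x = (1.0 - d_fake) * w   # d log D(x) / dx at x = x_fake
        a += lr * grad_x * z
        b += lr * grad_x
    return (a, b), (w, c)

if __name__ == "__main__":
    (a, b), (w, c) = train_toy_gan()
    print(f"generator: a={a:.3f}, b={b:.3f}; discriminator: w={w:.3f}, c={c:.3f}")
```

The two updates pull in opposite directions: the discriminator raises its score on real samples and lowers it on generated ones, while the generator shifts its output toward whatever the discriminator currently rates as real.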

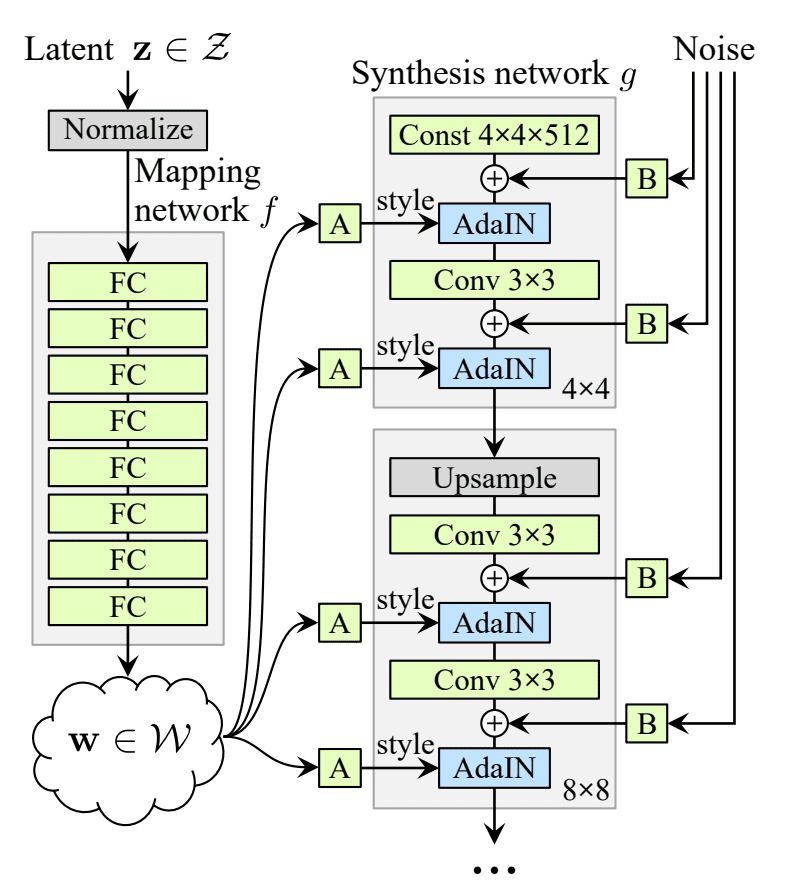

One of the most notable GAN models is StyleGAN, which has gained recognition for its ability to produce high-quality, realistic images. StyleGAN allows for control over various aspects of image generation, enabling the creation of entirely new faces that do not exist in reality. The architecture of StyleGAN is designed to separate high-level attributes (such as identity and pose) from stochastic variations (like freckles and hair), allowing for intuitive manipulation of the generated images.
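
To illustrate how style and stochastic detail can be kept apart, here is a rough sketch of two operations described in the StyleGAN paper: adaptive instance normalization (AdaIN), which lets a style vector set the scale and bias of each feature channel, and per-pixel noise injection. It works on a single channel stored as a nested list; the function names and the toy values are assumptions made for this example, and the real model learns the scale, bias, and noise strength rather than receiving them as arguments.

```python
import math
import random

def adain(feature_map, style_scale, style_bias, eps=1e-5):
    """Adaptive instance normalization for one H x W channel.

    The channel is normalized to zero mean and (approximately) unit
    variance, then rescaled and shifted by style-dependent values.
    """
    values = [v for row in feature_map for v in row]
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    std = math.sqrt(var + eps)
    return [[style_scale * (v - mean) / std + style_bias for v in row]
            for row in feature_map]

def add_noise(feature_map, strength, rng):
    """Add independent Gaussian noise to every pixel of one channel."""
    return [[v + strength * rng.gauss(0.0, 1.0) for v in row]
            for row in feature_map]

if __name__ == "__main__":
    rng = random.Random(0)
    channel = [[1.0, 2.0], [3.0, 4.0]]
    # Noise perturbs fine detail; the style then fixes the channel statistics.
    noisy = add_noise(channel, strength=0.1, rng=rng)
    print(adain(noisy, style_scale=2.0, style_bias=0.5))
```

Changing `style_scale` and `style_bias` alters the overall statistics of the channel, while changing the random seed only perturbs individual pixels, which is the intuition behind separating high-level attributes from stochastic variation.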

What Are Deepfakes?

According to Wikipedia, Deepfakes are synthetic media in which a person in an existing image or video is replaced with someone else’s likeness. While the concept of injecting a fake person into an image is not new, recent advancements in GAN technology have revolutionized the methods used for facial manipulation. Deepfake techniques can be categorized into three primary areas:

- Face Synthesis: The creation of entirely new, realistic faces using GANs.

- Face Swap: The replacement of one person’s face with another in images or videos.

- Facial Attributes and Expression: Modifying facial features such as hair color, age, gender, and emotional expressions.

Face Synthesis

Face synthesis focuses on generating non-existent, realistic faces. StyleGAN is a leading approach in this category, utilizing a generator architecture that learns to separate high-level attributes without supervision. This allows for precise control over the synthesis process, enabling the generation of faces that appear convincingly real.

Researchers have developed various methods to detect synthetic images. For instance, some studies place attention layers on top of a network's feature maps to highlight the manipulated regions of the face, then output a binary decision on whether the image is real or fake.
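
As a rough illustration of the attention idea, the sketch below computes a per-pixel attention map from a stack of feature channels, uses it to weight the features, and feeds the pooled result to a logistic classifier. Everything here is a simplifying assumption: the function names, the linear attention scoring, and the hand-picked weights are hypothetical, whereas published detectors learn these components inside a deep network.

```python
import math

def sigmoid(t):
    # Numerically safe logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

def attention_map(features, attn_weights, attn_bias=0.0):
    """Score each pixel in (0, 1) from its values across all channels.

    features: list of C channels, each an H x W nested list.
    """
    height, width = len(features[0]), len(features[0][0])
    return [[sigmoid(sum(w * ch[i][j] for w, ch in zip(attn_weights, features))
                     + attn_bias)
             for j in range(width)]
            for i in range(height)]

def classify(features, attn_weights, clf_weights, clf_bias=0.0):
    """Return (fake_probability, attention_map) for one set of feature maps."""
    attn = attention_map(features, attn_weights)
    height, width = len(attn), len(attn[0])
    # Attention-weighted global average pooling, one value per channel.
    pooled = [sum(ch[i][j] * attn[i][j]
                  for i in range(height) for j in range(width)) / (height * width)
              for ch in features]
    logit = sum(cw * p for cw, p in zip(clf_weights, pooled)) + clf_bias
    return sigmoid(logit), attn

if __name__ == "__main__":
    # One channel with a strong response in the bottom-right corner.
    features = [[[0.0, 0.0], [0.0, 5.0]]]
    prob, attn = classify(features, attn_weights=[1.0], clf_weights=[2.0], clf_bias=-1.0)
    print(prob, attn)
```

The attention map doubles as an explanation: pixels with values near 1 are the regions that contributed most to the real-or-fake decision.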

Face Swap

Face swapping has gained immense popularity, particularly in the realm of social media. This technique involves replacing a person’s face in a video or image with that of another individual. The FaceForensics++ dataset is a notable resource in this area, containing both real and manipulated videos created using computer graphics and deep learning methods.

The FaceSwap application, for instance, employs face alignment and image blending techniques to achieve realistic face swaps. It utilizes autoencoders—neural networks designed to learn efficient representations of data—to reconstruct images of the source and target faces.
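
The blending step is the easiest part of this pipeline to show in isolation. The sketch below assumes the source face has already been aligned to the target and that both are grayscale images stored as nested lists; `blend_face` and the soft mask are hypothetical names for this example and are not taken from the FaceSwap code base.

```python
def blend_face(source, target, mask):
    """Alpha-blend an aligned source face into a target image.

    For every pixel: out = m * source + (1 - m) * target, where the mask
    value m is 1 inside the face, 0 outside, and fractional near the edge
    so that the seam fades out gradually.
    """
    return [[m * s + (1.0 - m) * t
             for s, t, m in zip(src_row, tgt_row, mask_row)]
            for src_row, tgt_row, mask_row in zip(source, target, mask)]

if __name__ == "__main__":
    source = [[1.0, 1.0], [1.0, 1.0]]   # aligned face pixels
    target = [[0.0, 0.0], [0.0, 0.0]]   # original frame
    mask = [[0.0, 0.5], [1.0, 0.25]]    # soft mask with feathered edges
    print(blend_face(source, target, mask))
```

A hard 0/1 mask would leave a visible seam around the pasted face, which is why blending masks are usually feathered.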

As the technology evolves, so do the methods for detecting swapped faces. Initiatives like the Deepfake Detection Challenge (DFDC), backed by major tech companies and academic institutions, aim to spur innovation in detection technologies. Most detection systems utilize Convolutional Neural Networks (CNNs) to identify distinguishing features or "fingerprints" left by GAN-generated images.
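
To give a feel for what a convolutional filter can pick up, the sketch below slides a fixed high-pass kernel over an image and measures the energy of the response. This is only a stand-in: real detectors learn many filters from data, and the Laplacian kernel, the name `residual_energy`, and the toy images are assumptions made for illustration.

```python
def conv2d_valid(image, kernel):
    """2-D sliding-window filter without padding.

    Like most deep learning libraries, this computes cross-correlation
    (the kernel is not flipped).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(kernel[u][v] * image[i + u][j + v]
                 for u in range(kh) for v in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

# Discrete Laplacian: responds to fine texture, ignores flat regions.
HIGH_PASS = [[0.0, -1.0, 0.0],
             [-1.0, 4.0, -1.0],
             [0.0, -1.0, 0.0]]

def residual_energy(image):
    """Mean squared high-pass response, a crude texture statistic."""
    response = conv2d_valid(image, HIGH_PASS)
    values = [v for row in response for v in row]
    return sum(v * v for v in values) / len(values)

if __name__ == "__main__":
    flat = [[1.0] * 5 for _ in range(5)]
    checker = [[float((i + j) % 2) for j in range(5)] for i in range(5)]
    print(residual_energy(flat), residual_energy(checker))
```

A smooth region produces no response while a high-frequency pattern produces a strong one; a trained CNN stacks many such filters to separate the subtle periodic artifacts of generated images from natural texture.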

Facial Attributes and Expression

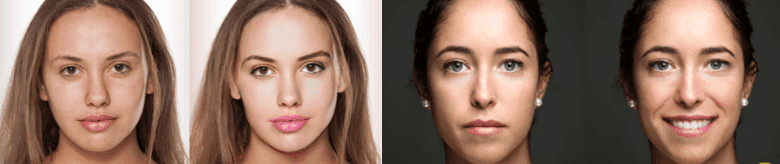

Manipulating facial attributes and expressions involves altering features such as hair color, skin tone, age, and emotional expressions. Applications like FaceApp have popularized this type of manipulation, allowing users to transform their appearance with a few taps on their smartphones. Techniques such as StarGAN enable image-to-image translation across multiple attributes, streamlining the manipulation process.
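
StarGAN handles multiple attributes with a single generator by giving it the target domain as an extra input: the label vector is replicated across the image and concatenated to it as additional channels. The sketch below shows only that input construction, not the generator itself; the helper names and the example domain list are hypothetical.

```python
def one_hot(index, num_domains):
    """Encode a domain index as a one-hot label vector."""
    return [1.0 if k == index else 0.0 for k in range(num_domains)]

def condition_on_domain(image_channels, domain_label):
    """Append one constant H x W channel per label entry.

    image_channels: list of C channels, each an H x W nested list.
    Returns C + len(domain_label) channels for the generator input.
    """
    height = len(image_channels[0])
    width = len(image_channels[0][0])
    label_channels = [[[value] * width for _ in range(height)]
                      for value in domain_label]
    return image_channels + label_channels

if __name__ == "__main__":
    domains = ["black_hair", "blond_hair", "aged"]   # illustrative only
    rgb = [[[0.2, 0.4], [0.6, 0.8]] for _ in range(3)]
    label = one_hot(domains.index("blond_hair"), len(domains))
    generator_input = condition_on_domain(rgb, label)
    print(len(generator_input))   # 3 image channels + 3 label channels
```

Because the target attribute is part of the input, switching from one manipulation to another only requires a different label rather than a separately trained model.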

Conclusion

The rise of Deepfake technology presents both exciting opportunities and significant challenges. While industries like film and media can leverage these advancements for creative purposes, the potential for misuse raises ethical concerns. As we navigate this complex landscape, it is crucial to develop robust detection methods to safeguard against the harmful effects of manipulated media. For those interested in exploring this topic further, a wealth of resources and datasets is available, including curated lists on platforms like GitHub.

References

- Karras, T., Laine, S., & Aila, T. (2019). A style-based generator architecture for generative adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 4401-4410).

- Tolosana, R., Vera-Rodriguez, R., Fierrez, J., Morales, A., & Ortega-Garcia, J. (2020). Deepfakes and Beyond: A Survey of Face Manipulation and Fake Detection. arXiv preprint arXiv:2001.00179.

- Chollet, F. (2017). Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 1251-1258).

- Choi, Y., Choi, M., Kim, M., Ha, J. W., Kim, S., & Choo, J. (2018). StarGAN: Unified generative adversarial networks for multi-domain image-to-image translation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 8789-8797).

- Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., … & Bengio, Y. (2014). Generative adversarial nets. In Advances in Neural Information Processing Systems (pp. 2672-2680).

- Afchar, D., Nozick, V., Yamagishi, J., & Echizen, I. (2018, December). MesoNet: A compact facial video forgery detection network. In 2018 IEEE International Workshop on Information Forensics and Security (WIFS) (pp. 1-7). IEEE.

- Rössler, A., Cozzolino, D., Verdoliva, L., Riess, C., Thies, J., & Nießner, M. (2019). FaceForensics++: Learning to detect manipulated facial images. In Proceedings of the IEEE International Conference on Computer Vision (pp. 1-11).

As we continue to explore the implications of Deepfake technology, it is essential to remain vigilant and informed, ensuring that we harness its potential responsibly while mitigating its risks.